AI in HR: A Peek Into the Future

Explore the evolving landscape of HR, offering a glimpse into how AI is poised to shape the future of human resources management.

The increased use of AI in the workplace has redesigned jobs, reshaped business processes, and raised some tough questions about ethics and oversight.

Today, employers face the challenge of decoding AI regulations that differ widely across jurisdictions while balancing innovation with compliance. Some guidance exists, but much remains unclear.

So, how can companies stay ahead when the rules are still being written?

Since taking office in January 2025, President Trump has changed the direction of America’s AI policy.

Essentially, the new administration hopes to secure the United States’ position as a global AI leader by reducing barriers to AI development.

One of Trump’s first executive actions was replacing Biden’s “Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence” order with “Removing Barriers to American Leadership in Artificial Intelligence,” a clear signal of a federal shift toward deregulation.

Yet, this federal pullback may have inadvertently created a more complex landscape for businesses.

The AI revolution is already here – with investments reaching $1 trillion and an explosion of AI-driven workplace tools that are transforming how we do business.

However, companies rushing to adopt these tools must be mindful of changing AI compliance laws and regulations.

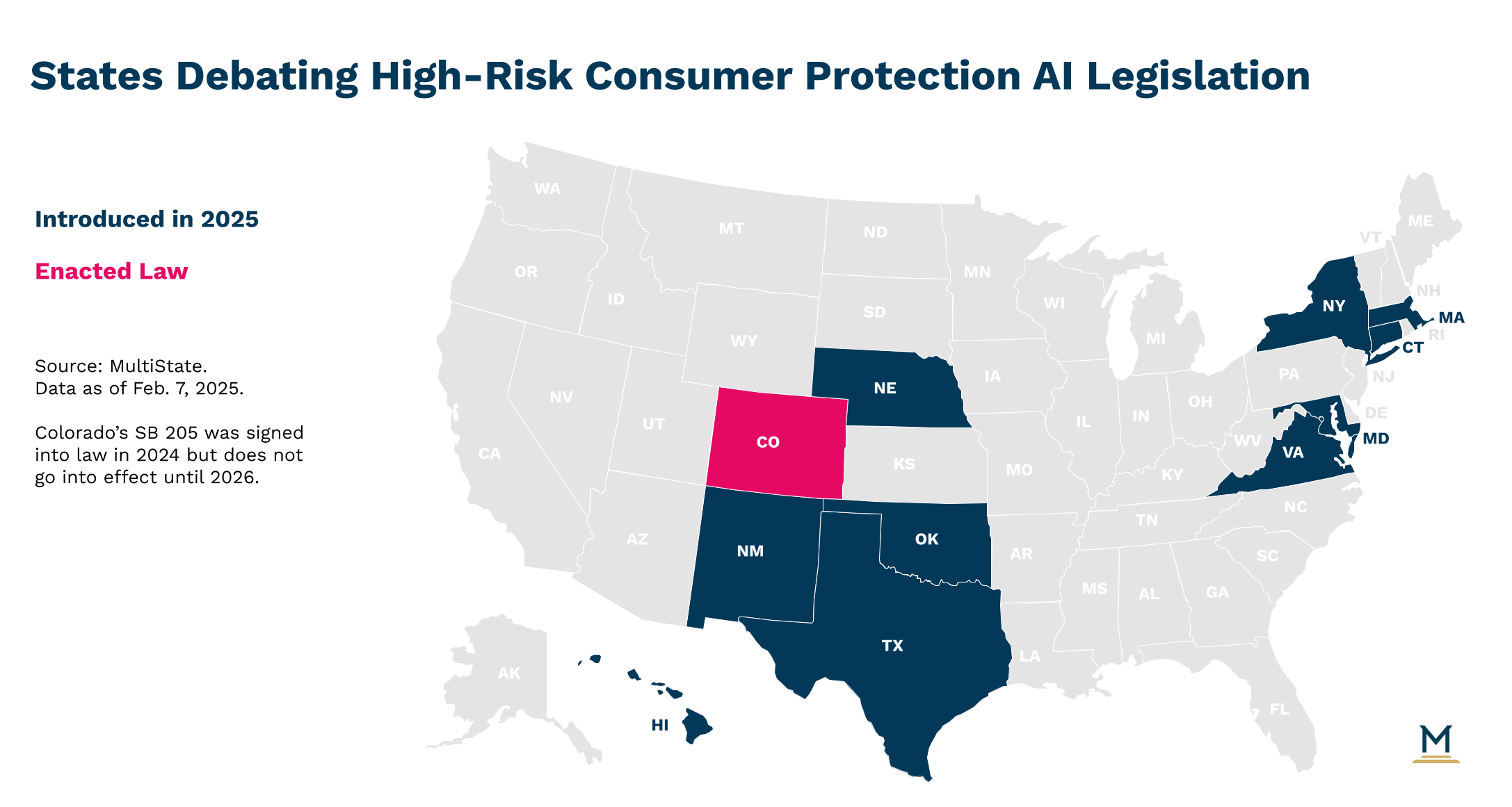

In 2024, lawmakers in 45 US states introduced nearly 700 bills related to artificial intelligence.

Out of those, 99 bills became law.

Across the country, 33 states have set up AI committees and task forces to examine AI’s impact, with their findings likely to guide future legislation.

In other words, with federal guardrails removed and oversight of AI loosening, state and local governments are filling the gap with their own regulations to protect fairness and ethics in the workplace.

Here’s a look at some recent developments in the regulatory landscape:

Colorado’s AI Act, the first algorithmic discrimination law, targets high-risk AI systems that influence “consequential decisions,” such as employment outcomes. Employers in Colorado using AI will need to:

It also gives workers the right to challenge unfavorable AI-generated outcomes, such as firings or demotions.

A similar bill targeting high-risk decisions was recently passed in Virginia and could take effect on July 1, 2026.

Employers will be forbidden from using AI in ways that discriminate based on protected classes under the Illinois Human Rights Act. Companies must alert employees if AI is used in hiring, promotions, or layoffs and are banned from using zip codes as proxies for protected characteristics.

Already in effect, Illinois’s Artificial Intelligence Video Interview Act requires employers to disclose if AI analyzes video job interviews, obtain candidate consent, delete recordings upon request, and report data to state agencies.

In New York City, employers using automated employment decision tools must provide advance notice to employees or job candidates, conduct annual independent bias audits, and publicly share audit results.

Despite the relaxed federal stance, employers must remain cautious in decoding and complying with AI regulations.

The lack of federal guidance does not change core anti-discrimination laws, such as Title VII of the Civil Rights Act and the Americans with Disabilities Act (ADA).

These laws and their state equivalents still apply.

Even though the EEOC and the Department of Labor have pulled back on AI guidance, existing laws still regulate AI use in hiring and workplace decisions.

Employers must be cautious with the following uses:

There is a growing recognition that employers must regularly monitor all their AI tools, especially those considered high-risk.

And as part of decoding AI regulation, it’s important to understand what qualifies as a high-risk AI system.

While AI has existed in some form for nearly 70 years, its presence in everyday life, especially in business operations, is greater than ever.

When AI starts making decisions about who gets hired, promoted, or receives a pay raise, the stakes are high.

These are what experts call “high-risk AI systems” (HRAIS), where algorithms have the power to shape careers and livelihoods. When left unchecked, these systems can unintentionally perpetuate bias and lead to unfair hiring practices or discriminatory promotions.

That’s why regulations like Colorado’s AI Act are appearing, with similar bills targeting HRAIS being considered in ten other states, including Texas, Nebraska, and Oklahoma.

Overall, these HRAIS frameworks aim to define the responsibilities of AI developers and deployers while protecting consumers—or, in the workplace, employees—from algorithmic discrimination.

The reality is that businesses can’t afford to ignore AI.

It is a powerful tool that’s changing industries, but it can also become a liability without supervision.

Simply put, companies that overlook AI compliance today risk legal trouble and reputational damage in the future.

Yet, recent studies reveal that 57% of workplaces lack an AI use policy or are still in the process of creating one, while 17% of employees are unsure if such a policy even exists.

So, to stay ahead, employers need to evaluate their AI systems, adjust workforce strategies, and build a solid framework.

Who is responsible for AI?

Answering this question and establishing clear lines of accountability is the foundation for managing AI responsibly. Companies, especially those that operate in multiple states, can work with experts and compliance services to create policies that guide how AI is developed and used.

The goal is simple: make sure AI decisions follow the law and reflect company values.

Organizations need codified principles – such as fairness, transparency, and accountability – to guide AI use.

In addition, companies building AI policies must proactively address bias in algorithms, ensure explainability for high-stakes decisions (like hiring or lending), and include human oversight in their systems.

Thorough documentation is not just good for transparency; it’s becoming a legal requirement in many states.

For these reasons, employers should maintain detailed records of their own or their vendors’ AI model origins, training data sources, and intended use cases with the same precision they would apply to their tax records.

Forward-thinking companies are developing “model cards” and “datasheets” that document an AI model’s characteristics, limitations, and risks in language accessible to both technical and non-technical stakeholders.
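As a rough illustration of what such a record might hold, here is a minimal Python sketch of a model card kept as structured data. The `ModelCard` class, its field names, and the example vendor are hypothetical and chosen only for illustration; they do not follow any particular statute or published template, so the fields should be adapted to whatever your counsel and vendors actually require.

```python
from dataclasses import dataclass, field, fields
from typing import List


@dataclass
class ModelCard:
    """Hypothetical minimal record for one AI tool (field names are illustrative)."""
    name: str
    vendor: str                       # who built or supplied the model
    intended_use: str                 # what decisions the tool is meant to support
    training_data_sources: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)
    human_oversight: str = ""         # who can review or override the output


def missing_fields(card: ModelCard) -> List[str]:
    """Return the names of fields that are still empty, in declaration order."""
    return [f.name for f in fields(card) if not getattr(card, f.name)]


def summarize(card: ModelCard) -> str:
    """Render the card as plain text for non-technical readers."""
    lines = [
        f"Model: {card.name} (vendor: {card.vendor})",
        f"Intended use: {card.intended_use}",
        "Training data: " + (", ".join(card.training_data_sources) or "not documented"),
        "Limitations: " + (", ".join(card.known_limitations) or "not documented"),
        "Human oversight: " + (card.human_oversight or "not documented"),
    ]
    return "\n".join(lines)


# Example with made-up values: a card that is only partly filled in.
card = ModelCard(
    name="resume-screener",
    vendor="ExampleVendor",
    intended_use="rank applicants for recruiter review",
)
print(summarize(card))
print("Still to document:", missing_fields(card))
```

Keeping the card as data rather than free text makes it easy to flag gaps, such as undocumented training data, before a tool goes into use.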

While risk is an inherent part of innovation, it doesn’t have to be a blind spot.

Regular risk audits and “stress tests” for high-impact applications can help identify vulnerabilities before they escalate.

No matter how advanced your predictive analytics are, it’s difficult to predict the real-world challenges that can happen after deploying AI systems.

For this reason, it’s important to get feedback from internal teams, job candidates, and other third parties about their experiences with your AI processes.

Ultimately, AI systems should be treated as dynamic, evolving tools that require constant monitoring. Establish protocols to periodically review and test AI models to catch biases, inaccuracies, or unintended consequences.

In other words, employers should do both pre-deployment checks and, more importantly, post-deployment audits.
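One simple post-deployment check is to compare selection rates across groups. The Python sketch below computes each group's selection rate and its ratio to the highest group's rate, then flags ratios below 0.8. That threshold is an assumption borrowed from the "four-fifths" rule of thumb in the US federal Uniform Guidelines on Employee Selection Procedures; it is a screening heuristic, not a legal test on its own, and the function names and sample data here are hypothetical.

```python
from collections import Counter


def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs -> {group: selection rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    top = max(rates.values())
    if top == 0:
        raise ValueError("no selections recorded, so ratios are undefined")
    return {group: rate / top for group, rate in rates.items()}


def flag_groups(ratios, threshold=0.8):
    """Return groups whose ratio falls below the threshold (0.8 is an assumed default)."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Made-up data: group A has 5 of 10 candidates advanced, group B has 3 of 10.
outcomes = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)
ratios = impact_ratios(selection_rates(outcomes))
print(ratios)
print("Review needed for:", flag_groups(ratios))
```

A flagged group is a prompt for closer human review of the tool and its inputs, not proof of discrimination. Small sample sizes in particular can make these ratios unstable.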

While AI can streamline work processes, it’s the skills that AI can’t replace – like empathy, critical thinking, and ethical judgment – that are at the center of fair and human-centered workplaces.

As new technology continues to shape our world, corporations are responsible for making sure it uplifts rather than undermines people.

Yet, as the number of companies adopting AI rises, so do lawsuits related to AI-driven discrimination.

For these reasons, businesses must ask the tough questions and address some core workers’ rights challenges:

The role of AI in HR, and more broadly the workplace, requires thoughtful integration because true success shouldn’t come at the expense of workers.

In 2025, decoding AI regulation is more than a compliance exercise – it’s a commitment to ethical innovation.

In this new era of emerging regulatory standards, companies that build AI frameworks rooted in transparency, fairness, and accountability will be better positioned to avoid legal risks and protect their workforce.

Beyond compliance, ethical AI use is a competitive advantage.

It cultivates trust, strengthens company culture, and showcases leadership in a world where responsible AI practices define the future of work.

Embracing AI is no longer optional for companies, and adopting it responsibly and ethically is imperative for success.

Senior Content Writer at Shortlister
